SECOND Point Cloud Detection: A Detailed Code Walkthrough

1. Introduction

SECOND is another voxel-based, anchor-based point cloud detector. Its overall architecture and most of its implementation follow VoxelNet, with three changes. First, the 3D convolutions in the middle layers are replaced by sparse convolutions, which speeds up both training and inference. Second, the angle prediction is reworked so that a box predicted in exactly the opposite direction to the ground truth no longer produces a large loss. Third, SECOND introduces GT_Aug, a ground-truth sampling augmentation for point clouds. Readers unfamiliar with VoxelNet can start with my earlier post: VoxelNet点云检测详解 (NNNNNathan's CSDN blog).

SECOND paper: Sensors | Free Full-Text | SECOND: Sparsely Embedded Convolutional Detection

SECOND code: GitHub - traveller59/second.pytorch: SECOND for KITTI/NuScenes object detection

The code analysis in this post follows the OpenPCDet implementation; differences from the original are pointed out along the way: GitHub - open-mmlab/OpenPCDet: OpenPCDet Toolbox for LiDAR-based 3D Object Detection.

My annotated code repository: GitHub - Nathansong/OpenPCDdet-annotated: annotated OpenPCDet model code

2. SECOND Network Modules

[Figure: overall architecture of SECOND (Sparsely Embedded CONvolutional Detection), from the original paper]

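[Figure: structural differences between SECOND and VoxelNet, from 【3D目标检测】SECOND算法解析 - 知乎]

Note: the VFE point feature extraction module taken from VoxelNet has been replaced in the author's latest implementation, because the original VFE was too slow and used too much GPU memory. See this issue: https://github.com/traveller59/second.pytorch/issues/153

Class structure of the SECOND implementation in PCDet: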

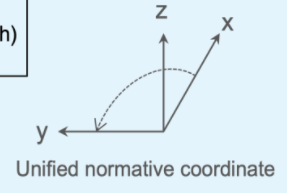

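1. MeanVFE (voxel feature encoding)
2. VoxelBackBone8x (middle convolutional layers; 3D sparse convolution here)
3. HeightCompression (compression along the Z axis)
4. BaseBEVBackbone (2D convolutional feature extraction in the BEV view)
5. AnchorHeadSingle (anchor classification and box prediction)

3. VFE (Voxel Feature Encoding)

3.1 Point Cloud Grouping

In the earliest SECOND release, points were turned into voxels the same way as in VoxelNet: a buffer holding at most N voxels is allocated, and the point cloud is traversed once to assign every point to its voxel, while recording each voxel's coordinate in the voxel grid and the number of points it contains (see section 2.1.5, the efficient implementation part, of my VoxelNet post). The latest implementation does this with the voxel generator provided by the spconv library.

Grouping the point cloud produces three arrays:
1. All voxels, shape (N, 5, 4); 5 is the maximum number of points per voxel and 4 is the per-point data (x, y, z, reflect intensity).
2. The coordinate of each voxel, shape (N, 3).
3. The number of real (non-padded) points in each voxel, shape (N).

Notes:
1. The paper uses different network structures for cars, cyclists and pedestrians; PCDet trains all three classes with a single structure.
2. In the KITTI implementation the point cloud range is [0, -40, -3, 70.4, 40, 1]; points outside it are cropped. Coordinates follow OpenPCDet's unified convention: x forward, y left, z up, with the rotation angle positive counter-clockwise from x to y.
3. In the paper each voxel measures 0.2 x 0.2 x 0.4 m and 35 points are sampled per voxel. In the PCDet implementation each voxel is 0.05 m long and wide and 0.1 m high, with 5 points sampled per voxel; during grouping, a voxel with fewer than 5 points is zero-padded to 5.
4. N is the maximum number of non-empty voxels: 16000 during training and 40000 at inference.

Code: pcdet/datasets/processor/data_processor.py

    def transform_points_to_voxels(self, data_dict=None, config=None):
        """
        Convert the point cloud to voxels using spconv's VoxelGeneratorV2.
        """
        if data_dict is None:
            grid_size = (self.point_cloud_range[3:6] - self.point_cloud_range[0:3]) / np.array(config.VOXEL_SIZE)
            self.grid_size = np.round(grid_size).astype(np.int64)
            self.voxel_size = config.VOXEL_SIZE
            # just bind the config, we will create the VoxelGeneratorWrapper later,
            # to avoid pickling issues in multiprocess spawn
            return partial(self.transform_points_to_voxels, config=config)

        if self.voxel_generator is None:
            self.voxel_generator = VoxelGeneratorWrapper(
                # voxel size along x, y, z: [0.05, 0.05, 0.1]
                vsize_xyz=config.VOXEL_SIZE,
                # point cloud range: [0, -40, -3, 70.4, 40, 1]
                coors_range_xyz=self.point_cloud_range,
                # per-point feature dimension: x, y, z, r (r is the lidar reflectance)
                num_point_features=self.num_point_features,
                # number of points sampled per voxel (5 for SECOND); zero-padded if fewer
                max_num_points_per_voxel=config.MAX_POINTS_PER_VOXEL,
                # maximum number of voxels: 16000 for training, 40000 for inference
                max_num_voxels=config.MAX_NUMBER_OF_VOXELS[self.mode],
            )

        # generate the voxels with spconv
        points = data_dict['points']
        voxel_output = self.voxel_generator.generate(points)

        # For an (N, 4) point cloud, voxel generation returns three arrays:
        #   voxels:      the points of each voxel, shape (M, 5, 4)
        #   coordinates: the (z, y, x) grid index of each voxel, shape (M, 3)
        #   num_points:  the number of real points in each voxel, shape (M,);
        #                slots beyond that count are zero padding
        voxels, coordinates, num_points = voxel_output

        # this condition is False here, so xyz is kept
        if not data_dict['use_lead_xyz']:
            voxels = voxels[..., 3:]  # remove xyz in voxels(N, 3)

        data_dict['voxels'] = voxels
        data_dict['voxel_coords'] = coordinates
        data_dict['voxel_num_points'] = num_points
        return data_dict

VoxelGeneratorWrapper is defined in the same file, pcdet/datasets/processor/data_processor.py.

3.2 Mean VFE

Once the voxels and their coordinates are available, the VFE step differs slightly from the paper; the reason is given in the issue linked above.

Paper: the VFE module is the same as in VoxelNet; see section 2.1.3, Stacked Voxel Feature Encoding, of my VoxelNet post.

New implementation: the Stacked Voxel Feature Encoding is removed, and the feature of a voxel is simply the mean of the points inside it. This is much faster to compute and still gives good detection results. The voxel feature shape changes as:

(Batch*16000, 5, 4) --> (Batch*16000, 4)

Code: pcdet/models/backbones_3d/vfe/mean_vfe.py

    class MeanVFE(VFETemplate):
        def __init__(self, model_cfg, num_point_features, **kwargs):
            super().__init__(model_cfg=model_cfg)
            # number of features per point (x, y, z, r)
            self.num_point_features = num_point_features

        def get_output_feature_dim(self):
            return self.num_point_features

        def forward(self, batch_dict, **kwargs):
            """
            Args:
                batch_dict:
                    voxels: (num_voxels, max_points_per_voxel, C)
                    voxel_num_points: optional (num_voxels) how many points in a voxel
                **kwargs:

            Returns:
                vfe_features: (num_voxels, C)
            """
            # here use the mean_vfe module to substitute for the original pointnet extractor architecture
            voxel_features, voxel_num_points = batch_dict['voxels'], batch_dict['voxel_num_points']
            # sum over all points of each voxel
            # e.g. SECOND shape (Batch*16000, 5, 4) -> (Batch*16000, 4)
            points_mean = voxel_features[:, :, :].sum(dim=1, keepdim=False)
            # normalizer: clamp the point count to at least 1 to avoid division by zero
            normalizer = torch.clamp_min(voxel_num_points.view(-1, 1), min=1.0).type_as(voxel_features)
            # mean of the point features in each voxel
            points_mean = points_mean / normalizer
            # store the voxel features back into batch_dict
            batch_dict['voxel_features'] = points_mean.contiguous()
            return batch_dict

4. VoxelBackBone8x

VoxelNet extracts voxel features with dense 3D convolutions, which are computationally heavy and consume a lot of resources. The SECOND author improved on this.

Sparse convolution was first open-sourced by Facebook and applied to 2D handwritten digit recognition; thanks to its special mapping rule it runs faster than ordinary convolution. The author adopts an alternative to regular sparse convolution here, the submanifold convolution, which makes an output location active if and only if the corresponding input location is active. This avoids generating too many active locations, which would otherwise slow down the subsequent convolution layers.

The author further developed a new sparse convolution method; for details see this Zhihu article: 【3D目标检测】SECOND算法解析 - 知乎

This part involves several kinds of sparse convolution, including the author's 3D sparse convolution and the submanifold convolution. I will cover it in a later post; for now, let us look at the code.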
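Before the code, the active-site rule described above can be illustrated with a toy example. The following is a minimal sketch in plain Python, not spconv code: it only tracks which sites are active on an unbounded 2D grid and ignores feature values entirely. The function names and the three input sites are hypothetical, chosen for illustration.

```python
from itertools import product


def regular_sparse_conv_sites(active, kernel=3):
    """Active output sites of a regular sparse convolution (stride 1):
    every site whose kernel window covers at least one active input site."""
    r = kernel // 2
    offsets = list(product(range(-r, r + 1), repeat=2))
    return {(y + dy, x + dx) for (y, x) in active for (dy, dx) in offsets}


def submanifold_conv_sites(active, kernel=3):
    """Active output sites of a submanifold convolution:
    exactly the active input sites, whatever the kernel size."""
    return set(active)


# Three active "voxels" on an unbounded 2D grid (hypothetical toy input).
active = {(2, 2), (2, 3), (5, 5)}

regular, subm = set(active), set(active)
for layer in range(1, 4):
    regular = regular_sparse_conv_sites(regular)
    subm = submanifold_conv_sites(subm)
    print(f"layer {layer}: regular={len(regular)} active sites, submanifold={len(subm)}")
```

With regular sparse convolution the active set dilates at every layer, while the submanifold variant keeps it fixed, which is why stacking many such layers stays cheap.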

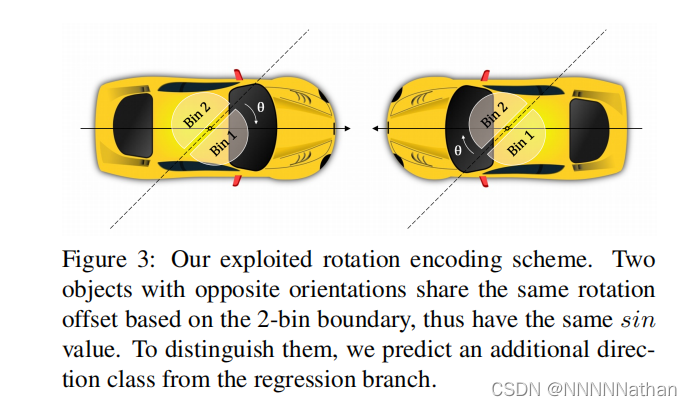

The middle feature extraction layers in SECOND: yellow denotes the author's sparse convolution, white denotes submanifold convolution, and red denotes the sparse-to-dense layer. The numbers on top are the spatial sizes of the sparse data (the sizes in the code differ from those in the figure).

Code: pcdet/models/backbones_3d/spconv_backbone.py

    class VoxelBackBone8x(nn.Module):
        def __init__(self, model_cfg, input_channels, grid_size, **kwargs):
            super().__init__()
            self.model_cfg = model_cfg
            norm_fn = partial(nn.BatchNorm1d, eps=1e-3, momentum=0.01)

            self.sparse_shape = grid_size[::-1] + [1, 0, 0]

            self.conv_input = spconv.SparseSequential(
                spconv.SubMConv3d(input_channels, 16, 3, padding=1, bias=False, indice_key='subm1'),
                norm_fn(16),
                nn.ReLU(),
            )
            block = post_act_block

            self.conv1 = spconv.SparseSequential(
                block(16, 16, 3, norm_fn=norm_fn, padding=1, indice_key='subm1'),
            )

            self.conv2 = spconv.SparseSequential(
                # [1600, 1408, 41] ...
            # [...] the listing breaks off here and resumes inside forward()

            # [batch_size, 16, [41, 1600, 1408]]
            x_conv1 = self.conv1(x)
            # [batch_size, 16, [41, 1600, 1408]] --> [batch_size, 32, [21, 800, 704]]
            x_conv2 = self.conv2(x_conv1)
            # [batch_size, 32, [21, 800, 704]] --> [batch_size, 64, [11, 400, 352]]
            x_conv3 = self.conv3(x_conv2)
            # [batch_size, 64, [11, 400, 352]] --> [batch_size, 64, [5, 200, 176]]
            x_conv4 = self.conv4(x_conv3)

            # for detection head
            # [200, 176, 5] -> [200, 176, 2]
            # [batch_size, 64, [5, 200, 176]] --> [batch_size, 128, [2, 200, 176]]
            out = self.conv_out(x_conv4)

            batch_dict.update({
                'encoded_spconv_tensor': out,
                'encoded_spconv_tensor_stride': 8
            })
            batch_dict.update({
                'multi_scale_3d_features': {
                    'x_conv1': x_conv1,
                    'x_conv2': x_conv2,
                    'x_conv3': x_conv3,
                    'x_conv4': x_conv4,
                }
            })
            batch_dict.update({
                'multi_scale_3d_strides': {
                    'x_conv1': 1,
                    'x_conv2': 2,
                    'x_conv3': 4,
                    'x_conv4': 8,
                }
            })

            return batch_dict

Here block is post_act_block, which builds one sparse convolution block:

    def post_act_block(in_channels, out_channels, kernel_size, indice_key=None, stride=1, padding=0,
                       conv_type='subm', norm_fn=None):
        # Pick the convolution by conv_type, then wrap it with the norm layer
        # and the activation into one block.
        if conv_type == 'subm':
            conv = spconv.SubMConv3d(in_channels, out_channels, kernel_size, bias=False, indice_key=indice_key)
        elif conv_type == 'spconv':
            conv = spconv.SparseConv3d(in_channels, out_channels, kernel_size, stride=stride, padding=padding,
                                       bias=False, indice_key=indice_key)
        elif conv_type == 'inverseconv':
            conv = spconv.SparseInverseConv3d(in_channels, out_channels, kernel_size, indice_key=indice_key, bias=False)
        else:
            raise NotImplementedError

        m = spconv.SparseSequential(
            conv,
            norm_fn(out_channels),
            nn.ReLU(),
        )

        return m

5. HeightCompression (compression along the Z axis)

The tensor produced by VoxelBackBone8x is a sparse tensor with shape:

[batch_size, 128, [2, 200, 176]]

It has to be converted to a dense tensor, and the dense data is then stacked along the Z axis into the channel dimension. This is acceptable because in KITTI no objects overlap along the Z axis. It also has the following benefits:
1. It simplifies the design of the detection head.
2. It enlarges the receptive field along the height direction.
3. It speeds up training and inference.

The resulting BEV feature map is (batch_size, 128*2, 200, 176), so techniques from image detection can be applied.

Code: pcdet/models/backbones_2d/map_to_bev/height_compression.py

    # compress along the height direction
    class HeightCompression(nn.Module):
        def __init__(self, model_cfg, **kwargs):
            super().__init__()
            self.model_cfg = model_cfg
            # number of channels of the BEV feature map
            self.num_bev_features = self.model_cfg.NUM_BEV_FEATURES

        def forward(self, batch_dict):
            """
            Args:
                batch_dict:
                    encoded_spconv_tensor: sparse tensor
            Returns:
                batch_dict:
                    spatial_features:

            """
            # output features of VoxelBackBone8x
            encoded_spconv_tensor = batch_dict['encoded_spconv_tensor']
            # Convert the sparse tensor to a dense one, [batch_size, 128, 2, 200, 176].
            # batch index, spatial_shape, indices and features together place every
            # feature at its position in the dense tensor.
            spatial_features = encoded_spconv_tensor.dense()
            # batch_size, 128, 2, 200, 176
            N, C, D, H, W = spatial_features.shape
            """
            Reshape the dense 3D tensor into a 2D bird's-eye-view feature map by
            merging the two depth slices into the channel dimension.
            shape: (batch_size, 256, 200, 176)
            No objects are stacked along z, so this enlarges the receptive field
            along z, speeds up the network and simplifies the detection head.
            """
            spatial_features = spatial_features.view(N, C * D, H, W)
            # store the features and the stride in batch_dict
            batch_dict['spatial_features'] = spatial_features
            # downsampling factor of the feature map: 8
            batch_dict['spatial_features_stride'] = batch_dict['encoded_spconv_tensor_stride']
            return batch_dict

6. BaseBEVBackbone

With the image-like feature map in hand, features are now extracted in the BEV view. The structure is similar to VoxelNet's: the feature map is downsampled to different scales, each scale is upsampled again, and the results are concatenated along the channel dimension.

SECOND has two downsampling branches and, correspondingly, two deconvolution branches.

BEV feature map from HeightCompression: (batch_size, 128*2, 200, 176)
Downsampling branch 1: (batch_size, 128*2, 200, 176) --> (batch, 128, 200, 176)
Downsampling branch 2: (batch, 128, 200, 176) --> (batch, 256, 100, 88) (it takes the output of branch 1, since the blocks are applied in sequence)
Deconvolution branch 1: (batch, 128, 200, 176) --> (batch, 256, 200, 176)
Deconvolution branch 2: (batch, 256, 100, 88) --> (batch, 256, 200, 176)
Feature map after concatenating along the channel dimension: (batch, 256 * 2, 200, 176)

Code: pcdet/models/backbones_2d/base_bev_backbone.py

    def forward(self, data_dict):
        """
        Args:
            data_dict:
                spatial_features : (batch_size, 256, 200, 176)
        Returns:
        """
        spatial_features = data_dict['spatial_features']
        ups = []
        ret_dict = {}
        x = spatial_features

        # run conv and deconv for each branch
        for i in range(len(self.blocks)):
            """
            SECOND has two downsampling branches:
            branch 1: (batch, 128, 200, 176)
            branch 2: (batch, 256, 100, 88)
            """
            x = self.blocks[i](x)

            stride = int(spatial_features.shape[2] / x.shape[2])
            ret_dict['spatial_features_%dx' % stride] = x

            # if deconv layers exist, upsample the conv output
            """
            One deconvolution per downsampling branch:
            branch 1: (batch, 128, 200, 176) --> (batch, 256, 200, 176)
            branch 2: (batch, 256, 100, 88) --> (batch, 256, 200, 176)
            """
            if len(self.deblocks) > 0:
                ups.append(self.deblocks[i](x))
            else:
                ups.append(x)

        # concatenate the upsampled results along the channel dimension
        if len(ups) > 1:
            """
            The two upsampled maps both have shape (batch, 256, 200, 176);
            concatenating along dim 1 (channels) gives (batch, 512, 200, 176).
            """
            x = torch.cat(ups, dim=1)
        elif len(ups) == 1:
            x = ups[0]

        # False for SECOND
        if len(self.deblocks) > len(self.blocks):
            x = self.deblocks[-1](x)

        # store the result as spatial_features_2d and return
        data_dict['spatial_features_2d'] = x

        return data_dict

7. AnchorHeadSingle

The feature map after BaseBEVBackbone is (batch, 256 * 2, 200, 176). SECOND introduces direction classification: a direction classification head is added to VoxelNet's two prediction heads, to handle the large loss that arises during angle training when a predicted box points in the opposite direction to the GT box.

[Figure: detection head]
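The role of the direction head can be made concrete with a small numeric sketch. It assumes the sine-based angle residual from the SECOND paper, sin(θ_pred - θ_gt), and a two-bin direction label; the helper names and the `dir_offset` parameter are hypothetical and only serve the illustration, they are not the OpenPCDet API.

```python
import math


def sin_angle_residual(theta_pred, theta_gt):
    """Sine-based angle residual: close to zero both when the headings agree
    and when they differ by exactly pi (opposite directions)."""
    return math.sin(theta_pred - theta_gt)


def direction_label(theta, dir_offset=0.0, num_bins=2):
    """Hypothetical direction target: index of the bin of width 2*pi/num_bins
    that the heading falls into."""
    period = 2 * math.pi
    shifted = (theta - dir_offset) % period
    return int(shifted // (period / num_bins))


theta_gt = 0.3
theta_flipped = theta_gt + math.pi  # same box footprint, opposite heading

# The regression residual cannot tell the two headings apart ...
print(abs(sin_angle_residual(theta_flipped, theta_gt)) < 1e-9)
# ... but the direction labels differ, so a classifier can resolve the flip.
print(direction_label(theta_gt), direction_label(theta_flipped))
```

The regression branch is thus free to treat a flipped box as a near-perfect fit, and the extra classification head recovers which of the two headings is the right one.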

Each head makes its prediction with a 1x1 convolution.

7.1 Anchor generation

Because objects of a given class have a fairly fixed size in the 3D world, the anchor sizes are simply the mean length, width and height of each class in KITTI, and every class has anchors at two orientations, 0 and 90 degrees.

Anchor sizes per class (in meters):
Car: [3.9, 1.6, 1.56], anchor center at z = -1 m
Pedestrian: [0.8, 0.6, 1.73], anchor bottom at z = -0.6 m
Cyclist: [1.76, 0.6, 1.73], anchor bottom at z = -0.6 m

For pedestrians and cyclists, -0.6 m is the bottom height used in the PCDet config, so the anchor center ends up at -0.6 + 1.73/2 = 0.265 m.

Each anchor is assigned two one-hot vectors, one for direction classification and one for class classification, plus a 7-dimensional vector for anchor box regression, (x, y, z, l, w, h, θ), where θ is the object heading in the PCDet coordinate system.

This yields anchors for the 3 classes, each with shape [z, y, x, num_size, num_rot, 7], where num_size is the number of sizes per class (1), num_rot is the number of orientations per anchor (2), and the 7-dimensional vector is [x, y, z, dx, dy, dz, rot] (the parameters of each anchor box).

Code: pcdet/models/dense_heads/target_assigner/anchor_generator.py

Note: the SECOND feature map is 200 wide and 176 long. The comments below were written for PointPillars; replace every 216 with 176 and every 248 with 200. The point cloud range is [0, -40, -3, 70.4, 40, 1].

    class AnchorGenerator(object):
        def __init__(self, anchor_range, anchor_generator_config):
            super().__init__()
            self.anchor_generator_cfg = anchor_generator_config  # list:3
            # range of the point cloud covered by anchors: [0, -39.68, -3, 69.12, 39.68, 1]
            self.anchor_range = anchor_range
            # anchor sizes (l, w, h) from the config
            # list:3 --> car, pedestrian, cyclist [[[3.9, 1.6, 1.56]],[[0.8, 0.6, 1.73]],[[1.76, 0.6, 1.73]]]
            self.anchor_sizes = [config['anchor_sizes'] for config in anchor_generator_config]
            # anchor rotations in radians, i.e. 0 and 90 degrees
            # list:3 --> [[0, 1.57],[0, 1.57],[0, 1.57]]
            self.anchor_rotations = [config['anchor_rotations'] for config in anchor_generator_config]
            # bottom height of each anchor on the z axis; the KITTI z range is -3 m to 1 m
            # list:3 --> [[-1.78],[-0.6],[-0.6]]
            self.anchor_heights = [config['anchor_bottom_heights'] for config in anchor_generator_config]
            # Whether each anchor should sit at the center of its grid cell,
            # e.g. cell [1, 1] becomes [1.5, 1.5] when aligned to the center.
            # Defaults to False
            # list:3 --> [False, False, False]
            self.align_center = [config.get('align_center', False) for config in anchor_generator_config]

            assert len(self.anchor_sizes) == len(self.anchor_rotations) == len(self.anchor_heights)
            self.num_of_anchor_sets = len(self.anchor_sizes)  # 3

        def generate_anchors(self, grid_sizes):
            assert len(grid_sizes) == self.num_of_anchor_sets
            # 1. initialization
            all_anchors = []
            num_anchors_per_location = []
            # 2. generate the anchors of the three classes one class at a time
            for grid_size, anchor_size, anchor_rotation, anchor_height, align_center in zip(
                    grid_sizes, self.anchor_sizes, self.anchor_rotations, self.anchor_heights, self.align_center):
                # 2 = 2x1x1 --> 2 anchors per location, one per orientation
                num_anchors_per_location.append(len(anchor_rotation) * len(anchor_size) * len(anchor_height))
                # align_center is False here, so the else branch is taken
                if align_center:
                    x_stride = (self.anchor_range[3] - self.anchor_range[0]) / grid_size[0]
                    y_stride = (self.anchor_range[4] - self.anchor_range[1]) / grid_size[1]
                    # center alignment: shift by half a cell
                    x_offset, y_offset = x_stride / 2, y_stride / 2
                else:
                    # 2.1 size of one grid cell in point cloud space,
                    # used to map each anchor back to real-world coordinates
                    # (69.12 - 0) / (216 - 1) = 0.3214883848678234 m
                    x_stride = (self.anchor_range[3] - self.anchor_range[0]) / (grid_size[0] - 1)
                    # (39.68 - (-39.68)) / (248 - 1) = 0.3212955490297634 m
                    y_stride = (self.anchor_range[4] - self.anchor_range[1]) / (grid_size[1] - 1)
                    # no center alignment, so the offset from the cell corner is 0
                    x_offset, y_offset = 0, 0

                # 2.2 build the 1D x_shifts, y_shifts and z_shifts
                # step x_stride from anchor_range[0] + x_offset up to anchor_range[3] + 1e-5
                # x coordinates --> 216 points in [0, 69.12]
                x_shifts = torch.arange(
                    self.anchor_range[0] + x_offset, self.anchor_range[3] + 1e-5, step=x_stride, dtype=torch.float32,
                ).cuda()
                # y coordinates --> 248 points in [-39.68, 39.68]
                y_shifts = torch.arange(
                    self.anchor_range[1] + y_offset, self.anchor_range[4] + 1e-5, step=y_stride, dtype=torch.float32,
                ).cuda()
                """
                new_tensor returns a new tensor with the same dtype and device as the
                source tensor, filled here with the values of anchor_height.
                """
                # [-1.78]
                z_shifts = x_shifts.new_tensor(anchor_height)
                # num_anchor_size = 1
                # num_anchor_rotation = 2
                num_anchor_size, num_anchor_rotation = anchor_size.__len__(), anchor_rotation.__len__()  # 1, 2
                # [0, 1.57] in radians
                anchor_rotation = x_shifts.new_tensor(anchor_rotation)
                # [[3.9, 1.6, 1.56]]
                anchor_size = x_shifts.new_tensor(anchor_size)

                # 2.3 build the grid coordinates with meshgrid
                x_shifts, y_shifts, z_shifts = torch.meshgrid([
                    x_shifts, y_shifts, z_shifts
                ])
                # meshgrid expands each input along the other dimensions:
                # x: (216,) --> (216, 1, 1) --> (216, 248, 1)
                # y: (248,) --> (1, 248, 1) --> (216, 248, 1)
                # z: (1,)   --> (1, 1, 1)   --> (216, 248, 1)

                # 2.4 stack the pieces into the final anchors (1, 248, 216, 1, 2, 7)

                # 2.4.1 stack the anchor positions
                # [x, y, z, 3] --> (216, 248, 1, 3), the position of every anchor;
                # the last dimension holds the (x, y, z) coordinates
                anchors = torch.stack((x_shifts, y_shifts, z_shifts), dim=-1)

                # 2.4.2 combine position and size: expand both to the same shape
                # (except the last dimension), then concatenate
                # (216, 248, 1, 3) --> (216, 248, 1, 1, 3)
                # dimensions: x, y, z, number of sizes for this class, anchor position
                anchors = anchors[:, :, :, None, :].repeat(1, 1, 1, anchor_size.shape[0], 1)
                # (1, 1, 1, 1, 3) --> (216, 248, 1, 1, 3)
                anchor_size = anchor_size.view(1, 1, 1, -1, 3).repeat([*anchors.shape[0:3], 1, 1])
                # anchors now carry position and size --> (216, 248, 1, 1, 6)
                # last dimension: (x, y, z, l, w, h)
                anchors = torch.cat((anchors, anchor_size), dim=-1)

                # 2.4.3 combine position, size and rotation
                # insert a new second-to-last dimension and repeat it once per rotation
                # (216, 248, 1, 1, 2, 6): x, y, z, number of sizes, number of rotations, 6 anchor parameters
                anchors = anchors[:, :, :, :, None, :].repeat(1, 1, 1, 1, num_anchor_rotation, 1)
                # (216, 248, 1, 1, 2, 1): rotation angle of the two orientations
                anchor_rotation = anchor_rotation.view(1, 1, 1, 1, -1, 1).repeat(
                    [*anchors.shape[0:3], num_anchor_size, 1, 1])
                # [x, y, z, num_size, num_rot, 7] --> (216, 248, 1, 1, 2, 7)
                # last dimension: position + size + rotation (x, y, z, l, w, h, theta)
                anchors = torch.cat((anchors, anchor_rotation), dim=-1)

                # 2.5 permute the anchor dimensions
                # [x, y, z, num_size, num_rot, 7] --> [z, y, x, num_size, num_rot, 7]
                # last dimension: [x, y, z, dx, dy, dz, rot]
                anchors = anchors.permute(2, 1, 0, 3, 4, 5).contiguous()
                # move z from the bottom of the anchor to its center
                # car: -1.78 + 1.56/2 = -1.0
                # pedestrian, cyclist: -0.6 + 1.73/2 = 0.265
                anchors[..., 2] += anchors[..., 5] / 2
                all_anchors.append(anchors)
            # all_anchors: [(1,248,216,1,2,7), (1,248,216,1,2,7), (1,248,216,1,2,7)]
            # num_anchors_per_location: [2, 2, 2]
            return all_anchors, num_anchors_per_location

7.2 Prediction heads

For every anchor on the feature map, the heads predict the class, the direction and the 7 box regression parameters.

Code: pcdet/models/dense_heads/anchor_head_single.py

Note: the SECOND feature map is 200 wide and 176 long; in the comments below replace every 216 with 176 and every 248 with 200. The point cloud range is [0, -40, -3, 70.4, 40, 1].

    class AnchorHeadSingle(AnchorHeadTemplate):
        """
        Args:
            model_cfg: config of AnchorHeadSingle
            input_channels: number of input channels, 512 for SECOND (384 in PointPillars)
            num_class: 3
            class_names: ['Car','Pedestrian','Cyclist']
            grid_size: (X, Y, Z)
            point_cloud_range: (0, -39.68, -3, 69.12, 39.68, 1)
            predict_boxes_when_training: False
        """

        def __init__(self, model_cfg, input_channels, num_class, class_names, grid_size, point_cloud_range,
                     predict_boxes_when_training=True, **kwargs):
            super().__init__(
                model_cfg=model_cfg, num_class=num_class, class_names=class_names, grid_size=grid_size,
                point_cloud_range=point_cloud_range,
                predict_boxes_when_training=predict_boxes_when_training
            )
            # Every location has anchors of 3 classes, each with two orientations (0 and 90 degrees)
            # num_anchors_per_location: [2, 2, 2]
            self.num_anchors_per_location = sum(self.num_anchors_per_location)  # sum([2, 2, 2])
            # Conv2d(512, 18, kernel_size=(1, 1), stride=(1, 1))
            self.conv_cls = nn.Conv2d(
                input_channels, self.num_anchors_per_location * self.num_class,
                kernel_size=1
            )
            # Conv2d(512, 42, kernel_size=(1, 1), stride=(1, 1))
            self.conv_box = nn.Conv2d(
                input_channels, self.num_anchors_per_location * self.box_coder.code_size,
                kernel_size=1
            )
            # with a direction loss, add the direction conv layer Conv2d(512, 12, kernel_size=(1, 1), stride=(1, 1))
            if self.model_cfg.get('USE_DIRECTION_CLASSIFIER', None) is not None:
                self.conv_dir_cls = nn.Conv2d(
                    input_channels,
                    self.num_anchors_per_location * self.model_cfg.NUM_DIR_BINS,
                    kernel_size=1
                )
            else:
                self.conv_dir_cls = None
            self.init_weights()

        # parameter initialization
        def init_weights(self):
            pi = 0.01
            # initialize the bias of the classification conv
            nn.init.constant_(self.conv_cls.bias, -np.log((1 - pi) / pi))
            # initialize the weights of the box regression conv
            nn.init.normal_(self.conv_box.weight, mean=0, std=0.001)

        def forward(self, data_dict):
            # features produced by the backbone
            # spatial_features_2d shape (batch_size, 512, 248, 216)
            spatial_features_2d = data_dict['spatial_features_2d']
            # class prediction for the 6 anchors at each location --> (batch_size, 18, 248, 216)
            cls_preds = self.conv_cls(spatial_features_2d)
            # box parameter prediction for the 6 anchors at each location --> (batch_size, 42, 248, 216)
            # each anchor predicts 7 parameters, (x, y, z, w, l, h, θ)
            box_preds = self.conv_box(spatial_features_2d)
            # move the class channel last [N, H, W, C] --> (batch_size, 248, 216, 18)
            cls_preds = cls_preds.permute(0, 2, 3, 1).contiguous()
            # move the box channel last [N, H, W, C] --> (batch_size, 248, 216, 42)
            box_preds = box_preds.permute(0, 2, 3, 1).contiguous()
            # store class and box predictions in the forward dict
            self.forward_ret_dict['cls_preds'] = cls_preds
            self.forward_ret_dict['box_preds'] = box_preds
            # direction classification
            if self.conv_dir_cls is not None:
                # every anchor is classified into one of two directions --> (batch_size, 12, 248, 216)
                dir_cls_preds = self.conv_dir_cls(spatial_features_2d)
                # move the direction channel last [N, H, W, C] --> (batch_size, 248, 216, 12)
                dir_cls_preds = dir_cls_preds.permute(0, 2, 3, 1).contiguous()
                # store the direction predictions in the forward dict
                self.forward_ret_dict['dir_cls_preds'] = dir_cls_preds
            else:
                dir_cls_preds = None

            """
            In training mode, every anchor must be assigned a GT to compute the loss.
            """
            if self.training:
                # targets_dict = {
                #     'box_cls_labels': cls_labels,  # (4, 211200)
                #     'box_reg_targets': bbox_targets,  # (4, 211200, 7)
                #     'reg_weights': reg_weights  # (4, 211200)
                # }
                targets_dict = self.assign_targets(
                    gt_boxes=data_dict['gt_boxes']  # (4, 39, 8)
                )
                # store the assignment result in the forward dict
                self.forward_ret_dict.update(targets_dict)

            # outside training (or if requested during training), decode the boxes directly
            if not self.training or self.predict_boxes_when_training:
                # decode the predictions into final boxes
                batch_cls_preds, batch_box_preds = self.generate_predicted_boxes(
                    batch_size=data_dict['batch_size'],
                    cls_preds=cls_preds, box_preds=box_preds, dir_cls_preds=dir_cls_preds
                )
                data_dict['batch_cls_preds'] = batch_cls_preds  # (1, 211200, 3) 70400*3=211200
                data_dict['batch_box_preds'] = batch_box_preds  # (1, 211200, 7)
                data_dict['cls_preds_normalized'] = False

            return data_dict

At this point we have the class prediction, the direction classification and the box regression for every box:
Class prediction shape: (batch_size, 200, 176, 18)
Direction classification shape: (batch_size, 200, 176, 12)
Box regression shape: (batch_size, 200, 176, 42)

8. Target assignment

Because the anchors of different classes are stacked at the same location for prediction, target assignment has to be carried out per class. This differs from the matching used in 2D detectors such as SSD or YOLO.
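The per-class labelling rule can be sketched in a few lines of plain Python. This is a simplified illustration, not the OpenPCDet code: it uses axis-aligned 2D boxes (x1, y1, x2, y2) instead of rotated BEV boxes, and the helper names are hypothetical. The thresholds 0.6 and 0.45 are the Car values quoted in this section's code comments.

```python
def iou_2d(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def assign_labels(anchors, gt_boxes, class_id, matched_thr, unmatched_thr):
    """Label the anchors of ONE class: class_id = foreground, 0 = background,
    -1 = ignored (IoU between the two thresholds)."""
    if not gt_boxes or not anchors:
        return [0] * len(anchors)
    ious = [[iou_2d(a, g) for g in gt_boxes] for a in anchors]
    labels = [-1] * len(anchors)
    for i, row in enumerate(ious):
        best = max(row)
        if best >= matched_thr:
            labels[i] = class_id
        elif best < unmatched_thr:
            labels[i] = 0
    # Force-match: every GT keeps its best anchor(s), even below the threshold.
    for j in range(len(gt_boxes)):
        best = max(row[j] for row in ious)
        if best > 0:
            for i, row in enumerate(ious):
                if row[j] == best:
                    labels[i] = class_id
    return labels


gt = [(0, 0, 2, 2)]
anchors = [(0, 0, 2, 2), (1, 0, 3, 2), (10, 10, 12, 12), (0.6, 0, 2.6, 2)]
print(assign_labels(anchors, gt, class_id=1, matched_thr=0.6, unmatched_thr=0.45))
# A GT whose best anchor only reaches IoU 1/3 still gets one positive anchor:
print(assign_labels([(1, 0, 3, 2), (10, 10, 12, 12)], gt, 1, 0.6, 0.45))
```

Running this once per class, with that class's anchors and GT boxes only, mirrors the per-class structure of the assigner.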
因此在匹配的时候,需要逐帧逐类别对生成的anchor进行匹配;其中函数assign_targets负责一帧的匹配,函数assign_targets_single负责一帧中单个类别的匹配 代码在:pcdet/models/dense_heads/target_assigner/axis_aligned_target_assigner.py 注:SECOND的特征图尺度大小为 宽200, 长176,下面注释中需要将所有216替换为176,248替换为200即可。同时点云的尺度信息是[0, -40, -3, 70.4, 40, 1]。 import numpy as np import torch from ....ops.iou3d_nms import iou3d_nms_utils from ....utils import box_utils class AxisAlignedTargetAssigner(object): def __init__(self, model_cfg, class_names, box_coder, match_height=False): super().__init__() # anchor生成配置参数 anchor_generator_cfg = model_cfg.ANCHOR_GENERATOR_CONFIG # 为预测box找对应anchor的参数 anchor_target_cfg = model_cfg.TARGET_ASSIGNER_CONFIG # 编码box的7个残差参数(x, y, z, w, l, h, θ) --> pcdet.utils.box_coder_utils.ResidualCoder self.box_coder = box_coder # 在PointPillars中指定正负样本的时候由BEV视角计算GT和先验框的iou,不需要进行z轴上的高度的匹配, # 想法是:1、点云中的物体都在同一个平面上,没有物体在Z轴发生重叠的情况 # 2、每个类别的高度相差不是很大,直接使用SmoothL1损失就可以达到很好的高度回归效果 self.match_height = match_height # 类别名称['Car', 'Pedestrian', 'Cyclist'] self.class_names = np.array(class_names) # ['Car', 'Pedestrian', 'Cyclist'] self.anchor_class_names = [config['class_name'] for config in anchor_generator_cfg] # anchor_target_cfg.POS_FRACTION = -1 < 0 --> None # 前景、背景采样系数 PointPillars、SECOND不考虑 self.pos_fraction = anchor_target_cfg.POS_FRACTION if anchor_target_cfg.POS_FRACTION >= 0 else None # 总采样数 PointPillars不考虑 self.sample_size = anchor_target_cfg.SAMPLE_SIZE # 512 # False 前景权重由 1/前景anchor数量 PointPillars不考虑 self.norm_by_num_examples = anchor_target_cfg.NORM_BY_NUM_EXAMPLES # 类别iou匹配为正样本阈值{'Car':0.6, 'Pedestrian':0.5, 'Cyclist':0.5} self.matched_thresholds = {} # 类别iou匹配为负样本阈值{'Car':0.45, 'Pedestrian':0.35, 'Cyclist':0.35} self.unmatched_thresholds = {} for config in anchor_generator_cfg: self.matched_thresholds[config['class_name']] = config['matched_threshold'] self.unmatched_thresholds[config['class_name']] = config['unmatched_threshold'] self.use_multihead = model_cfg.get('USE_MULTIHEAD', False) # False # self.separate_multihead = 
model_cfg.get('SEPARATE_MULTIHEAD', False) # if self.seperate_multihead: # rpn_head_cfgs = model_cfg.RPN_HEAD_CFGS # self.gt_remapping = {} # for rpn_head_cfg in rpn_head_cfgs: # for idx, name in enumerate(rpn_head_cfg['HEAD_CLS_NAME']): # self.gt_remapping[name] = idx + 1 def assign_targets(self, all_anchors, gt_boxes_with_classes): """ 处理一批数据中所有点云的anchors和gt_boxes, 计算每个anchor属于前景还是背景, 为每个前景的anchor分配类别和计算box的回归残差和回归权重 Args: all_anchors: [(N, 7), ...] gt_boxes_with_classes: (B, M, 8) # 最后维度数据为 (x, y, z, l, w, h, θ,class) Returns: all_targets_dict = { # 每个anchor的类别 'box_cls_labels': cls_labels, # (batch_size,num_of_anchors) # 每个anchor的回归残差 -->(∆x, ∆y, ∆z, ∆l, ∆w, ∆h, ∆θ) 'box_reg_targets': bbox_targets, # (batch_size,num_of_anchors,7) # 每个box的回归权重 'reg_weights': reg_weights # (batch_size,num_of_anchors) } """ # 1.初始化结果list并提取对应的gt_box和类别 bbox_targets = [] cls_labels = [] reg_weights = [] # 得到批大小 batch_size = gt_boxes_with_classes.shape[0] # 4 # 得到所有GT的类别 gt_classes = gt_boxes_with_classes[:, :, -1] # (4,num_of_gt) # 得到所有GT的7个box参数 gt_boxes = gt_boxes_with_classes[:, :, :-1] # (4,num_of_gt,7) # 2.对batch中的所有数据逐帧匹配anchor的前景和背景 for k in range(batch_size): cur_gt = gt_boxes[k] # 取出当前帧中的 gt_boxes (num_of_gt,7) """ 由于在OpenPCDet的数据预处理时,以一批数据中拥有GT数量最多的帧为基准, 其他帧中GT数量不足,则会进行补0操作,使其成为一个矩阵,例: [ [1,1,2,2,3,2], [2,2,3,1,0,0], [3,1,2,0,0,0] ] 因此这里从每一行的倒数第二个类别开始判断, 截取最后一个非零元素的索引,来取出当前帧中真实的GT数据 """ cnt = cur_gt.__len__() - 1 # 得到一批数据中最多有多少个GT # 这里的循环是找到最后一个非零的box,因为预处理的时候会按照batch最大box的数量处理,不足的进行补0 while cnt > 0 and cur_gt[cnt].sum() == 0: cnt -= 1 # 2.1提取当前帧非零的box和类别 cur_gt = cur_gt[:cnt + 1] # cur_gt_classes 例: tensor([1, 1, 2, 2, 2, 2, 2, 3, 3, 3, 3], device='cuda:0', dtype=torch.int32) cur_gt_classes = gt_classes[k][:cnt + 1].int() target_list = [] # 2.2 对每帧中的anchor和GT分类别,单独计算前背景 # 计算时候 每个类别的anchor是独立计算的 for anchor_class_name, anchors in zip(self.anchor_class_names, all_anchors): # anchor_class_name : 车 | 行人 | 自行车 # anchors : (1, 200, 176, 1, 2, 7) 7 --> (x, y, z, l, w, h, θ) if 
cur_gt_classes.shape[0] > 1: # self.class_names : ["car", "person", "cyclist"] # 这里减1是因为列表索引从0开始,目的是得到属于列表中gt中哪些类别是与当前处理的了类别相同,得到类别mask mask = torch.from_numpy(self.class_names[cur_gt_classes.cpu() - 1] == anchor_class_name) else: mask = torch.tensor([self.class_names[c - 1] == anchor_class_name for c in cur_gt_classes], dtype=torch.bool) # 在检测头中是否使用多头,是的话 此处为True,默认为False if self.use_multihead: # False anchors = anchors.permute(3, 4, 0, 1, 2, 5).contiguous().view(-1, anchors.shape[-1]) # if self.seperate_multihead: # selected_classes = cur_gt_classes[mask].clone() # if len(selected_classes) > 0: # new_cls_id = self.gt_remapping[anchor_class_name] # selected_classes[:] = new_cls_id # else: # selected_classes = cur_gt_classes[mask] selected_classes = cur_gt_classes[mask] else: # 2.2.1 计算所需的变量 得到特征图的大小 feature_map_size = anchors.shape[:3] # (1, 248, 216) # 将所有的anchors展平 shape : (216, 248, 1, 1, 2, 7) --> (107136, 7) anchors = anchors.view(-1, anchors.shape[-1]) # List: 根据累呗mask索引得到该帧中当前需要处理的类别 --> 车 | 行人 | 自行车 selected_classes = cur_gt_classes[mask] # 2.2.2 使用assign_targets_single来单独为某一类别的anchors分配gt_boxes, # 并为前景、背景的box设置编码和回归权重 single_target = self.assign_targets_single( anchors, # 该类的所有anchor cur_gt[mask], # GT_box shape : (num_of_GT_box, 7) gt_classes=selected_classes, # 当前选中的类别 matched_threshold=self.matched_thresholds[anchor_class_name], # 当前类别anchor与GT匹配为正样本的阈值 unmatched_threshold=self.unmatched_thresholds[anchor_class_name] # 当前类别anchor与GT匹配为负样本的阈值 ) target_list.append(single_target) # 到目前为止,处理完该帧单个类别和该类别anchor的前景和背景分配 if self.use_multihead: target_dict = { 'box_cls_labels': [t['box_cls_labels'].view(-1) for t in target_list], 'box_reg_targets': [t['box_reg_targets'].view(-1, self.box_coder.code_size) for t in target_list], 'reg_weights': [t['reg_weights'].view(-1) for t in target_list] } target_dict['box_reg_targets'] = torch.cat(target_dict['box_reg_targets'], dim=0) target_dict['box_cls_labels'] = torch.cat(target_dict['box_cls_labels'], dim=0).view(-1) 
target_dict['reg_weights'] = torch.cat(target_dict['reg_weights'], dim=0).view(-1) else: target_dict = { # feature_map_size:(1,200,176, 2) 'box_cls_labels': [t['box_cls_labels'].view(*feature_map_size, -1) for t in target_list], # (1,248,216, 2, 7) 'box_reg_targets': [t['box_reg_targets'].view(*feature_map_size, -1, self.box_coder.code_size) for t in target_list], # (1,248,216, 2) 'reg_weights': [t['reg_weights'].view(*feature_map_size, -1) for t in target_list] } # list : 3*anchor (1, 248, 216, 2, 7) --> (1, 248, 216, 6, 7) -> (321408, 7) target_dict['box_reg_targets'] = torch.cat( target_dict['box_reg_targets'], dim=-2 ).view(-1, self.box_coder.code_size) # list:3 (1, 248, 216, 2) --> (1,248, 216, 6) -> (1*248*216*6, ) target_dict['box_cls_labels'] = torch.cat(target_dict['box_cls_labels'], dim=-1).view(-1) # list:3 (1, 200, 176, 2) --> (1, 200, 176, 6) -> (1*248*216*6, ) target_dict['reg_weights'] = torch.cat(target_dict['reg_weights'], dim=-1).view(-1) # 将结果填入对应的容器 bbox_targets.append(target_dict['box_reg_targets']) cls_labels.append(target_dict['box_cls_labels']) reg_weights.append(target_dict['reg_weights']) # 到这里该batch的点云全部处理完 # 3.将结果stack并返回 bbox_targets = torch.stack(bbox_targets, dim=0) # (batch_size,321408,7) cls_labels = torch.stack(cls_labels, dim=0) # (batch_size,321408) reg_weights = torch.stack(reg_weights, dim=0) # (batch_size,321408) all_targets_dict = { 'box_cls_labels': cls_labels, # (batch_size,321408) 'box_reg_targets': bbox_targets, # (batch_size,321408,7) 'reg_weights': reg_weights # (batch_size,321408) } return all_targets_dict def assign_targets_single(self, anchors, gt_boxes, gt_classes, matched_threshold=0.6, unmatched_threshold=0.45): """ 针对某一类别的anchors和gt_boxes,计算前景和背景anchor的类别,box编码和回归权重 Args: anchors: (107136, 7) gt_boxes: (该帧中该类别的GT数量,7) gt_classes: (该帧中该类别的GT数量, 1) matched_threshold: 0.6 unmatched_threshold: 0.45 Returns: 前景anchor ret_dict = { 'box_cls_labels': labels, # (107136,) 'box_reg_targets': bbox_targets, # (107136,7) 
'reg_weights': reg_weights, # (107136,) } """ # ----------------------------1.初始化-------------------------------# num_anchors = anchors.shape[0] # 216 * 248 = 107136 num_gt = gt_boxes.shape[0] # 该帧中该类别的GT数量 # 初始化anchor对应的label和gt_id ,并置为 -1,-1表示loss计算时候不会被考虑,背景的类别被设置为0 labels = torch.ones((num_anchors,), dtype=torch.int32, device=anchors.device) * -1 gt_ids = torch.ones((num_anchors,), dtype=torch.int32, device=anchors.device) * -1 # ---------------------2.计算该类别中anchor的前景和背景------------------------# if len(gt_boxes) > 0 and anchors.shape[0] > 0: # 1.计算该帧中某一个类别gt和对应anchors之间的iou(jaccard index) # anchor_by_gt_overlap shape : (107136, num_gt) # anchor_by_gt_overlap代表当前类别的所有anchor和当前类别中所有GT的iou anchor_by_gt_overlap = iou3d_nms_utils.boxes_iou3d_gpu(anchors[:, 0:7], gt_boxes[:, 0:7]) \ if self.match_height else box_utils.boxes3d_nearest_bev_iou(anchors[:, 0:7], gt_boxes[:, 0:7]) # NOTE: The speed of these two versions depends the environment and the number of anchors # anchor_to_gt_argmax = torch.from_numpy(anchor_by_gt_overlap.cpu().numpy().argmax(axis=1)).cuda() # 2.得到每一个anchor与哪个的GT的的iou最大 # anchor_to_gt_argmax表示数据维度是anchor的长度,索引是gt anchor_to_gt_argmax = anchor_by_gt_overlap.argmax(dim=1) # anchor_to_gt_max得到每一个anchor最匹配的gt的iou数值 anchor_to_gt_max = anchor_by_gt_overlap[ torch.arange(num_anchors, device=anchors.device), anchor_to_gt_argmax] # gt_to_anchor_argmax = torch.from_numpy(anchor_by_gt_overlap.cpu().numpy().argmax(axis=0)).cuda() # 3.找到每个gt最匹配anchor的索引和iou # (num_of_gt,) 得到每个gt最匹配的anchor索引 gt_to_anchor_argmax = anchor_by_gt_overlap.argmax(dim=0) # (num_of_gt,)找到每个gt最匹配anchor的iou数值 gt_to_anchor_max = anchor_by_gt_overlap[gt_to_anchor_argmax, torch.arange(num_gt, device=anchors.device)] # 4.将GT中没有匹配到的anchor的iou数值设置为-1 empty_gt_mask = gt_to_anchor_max == 0 # 得到没有匹配到anchor的gt的mask gt_to_anchor_max[empty_gt_mask] = -1 # 将没有匹配到anchor的gt的iou数值设置为-1 # 5.找到anchor中和gt存在最大iou的anchor索引,即前景anchor """ 由于在前面的实现中,仅仅找出来每个GT和anchor的最大iou索引,但是argmax返回的是索引最小的那个, 
在匹配的过程中可能一个GT和多个anchor拥有相同的iou大小, 所以此处要找出这个GT与所有anchors拥有相同最大iou的anchor """ # 以gt为基础,逐个anchor对应,比如第一个gt的最大iou为0.9,则在所有anchor中找iou为0.9的anchor # nonzero函数是numpy中用于得到数组array中非零元素的位置(数组索引)的函数 """ 矩阵比较例子 : anchors_with_max_overlap = torch.tensor([[0.78, 0.1, 0.9, 0], [0.0, 0.5, 0, 0], [0.0, 0, 0.9, 0.8], [0.78, 0.1, 0.0, 0]]) gt_to_anchor_max = torch.tensor([0.78, 0.5, 0.9,0.8]) anchors_with_max_overlap = anchor_by_gt_overlap == gt_to_anchor_max # 返回的结果中包含了在anchor中与该GT拥有相同最大iou的所有anchor anchors_with_max_overlap = tensor([[ True, False, True, False], [False, True, False, False], [False, False, True, True], [ True, False, False, False]]) 在torch中nonzero返回的是tensor中非0元素的位置,此函数在numpy中返回的是非零元素的行列表和列列表。 torch返回结果tensor([[0, 0], [0, 2], [1, 1], [2, 2], [2, 3], [3, 0]]) numpy返回结果(array([0, 0, 1, 2, 2, 3]), array([0, 2, 1, 2, 3, 0])) 所以可以得到第一个GT同时与第一个anchor和最后一个anchor最为匹配 """ """所以在实际的一批数据中可以到得到结果为 tensor([[33382, 9], [43852, 10], [47284, 5], [50370, 4], [58498, 8], [58500, 8], [58502, 8], [59139, 2], [60751, 1], [61183, 1], [61420, 11], [62389, 0], [63216, 13], [63218, 13], [65046, 12], [65048, 12], [65478, 12], [65480, 12], [71924, 3], [78046, 7], [80150, 6]], device='cuda:0') 在第1维度拥有相同gt索引的项,在该类所有anchor中同时拥有多个与之最为匹配的anchor """ # (num_of_multiple_best_matching_for_per_GT,) anchors_with_max_overlap = (anchor_by_gt_overlap == gt_to_anchor_max).nonzero()[:, 0] # 得到这些最匹配anchor与该类别的哪个GT索引相对应 # 其实和(anchor_by_gt_overlap == gt_to_anchor_max).nonzero()[:, 1]的结果一样 gt_inds_force = anchor_to_gt_argmax[anchors_with_max_overlap] # (35,) # 将gt的类别赋值到对应的anchor的label中 labels[anchors_with_max_overlap] = gt_classes[gt_inds_force] # 将gt的索引也赋值到对应的anchors的gt_ids中 gt_ids[anchors_with_max_overlap] = gt_inds_force.int() # 6.根据matched_threshold和unmatched_threshold以及anchor_to_gt_max计算前景和背景索引,并更新labels和gt_ids """这里对labels和gt_ids的操作应该已经包含了上面的anchors_with_max_overlap""" # 找到最匹配的anchor中iou大于给定阈值的mask #(107136,) pos_inds = anchor_to_gt_max >= matched_threshold # 找到最匹配的anchor中iou大于给定阈值的gt的索引 #(105,) 
gt_inds_over_thresh = anchor_to_gt_argmax[pos_inds] # 将pos anchor对应gt的类别赋值到对应的anchor的label中 labels[pos_inds] = gt_classes[gt_inds_over_thresh] # 将pos anchor对应gt的索引赋值到对应的anchor的gt_id中 gt_ids[pos_inds] = gt_inds_over_thresh.int() bg_inds = (anchor_to_gt_max < unmatched_threshold).nonzero()[:, 0] # 找到背景anchor索引 else: bg_inds = torch.arange(num_anchors, device=anchors.device) # 找到前景anchor的索引--> (num_of_foreground_anchor,) # 106879 + 119 = 106998 < 107136 说明有一些anchor既不是背景也不是前景, # iou介于unmatched_threshold和matched_threshold之间 fg_inds = (labels > 0).nonzero()[:, 0] # 到目前为止得到哪些anchor是前景和哪些anchor是背景 # ------------------3.对anchor的前景和背景进行筛选和赋值--------------------# # 如果存在前景采样比例,则分别采样前景和背景anchor,PointPillar中没有前背景采样操作,前背景均衡使用了focal loss损失函数 if self.pos_fraction is not None: # anchor_target_cfg.POS_FRACTION = -1 < 0 --> None num_fg = int(self.pos_fraction * self.sample_size) # self.sample_size=512 # 如果前景anchor大于采样前景数 if len(fg_inds) > num_fg: # 计算要丢弃的前景anchor数目 num_disabled = len(fg_inds) - num_fg # 在前景数目中随机产生索引值,并取前num_disabled个关闭索引 # 比如:torch.randperm(4) # 输出:tensor([ 2, 1, 0, 3]) disable_inds = torch.randperm(len(fg_inds))[:num_disabled] # 将被丢弃的anchor的iou设置为-1 labels[disable_inds] = -1 # 更新前景索引 fg_inds = (labels > 0).nonzero()[:, 0] # 计算所需背景数 num_bg = self.sample_size - (labels > 0).sum() # 如果当前背景数大于所需背景数 if len(bg_inds) > num_bg: # torch.randint在0到len(bg_inds)之间,随机产生size为(num_bg,)的数组 enable_inds = bg_inds[torch.randint(0, len(bg_inds), size=(num_bg,))] # 将enable_inds的标签设置为0 labels[enable_inds] = 0 # bg_inds = torch.nonzero(labels == 0)[:, 0] else: # 如果该类别没有GT的话,将该类别的全部label置0,即所有anchor都是背景类别 if len(gt_boxes) == 0 or anchors.shape[0] == 0: labels[:] = 0 else: # anchor与GT的iou小于unmatched_threshold的anchor的类别设置类背景类别 labels[bg_inds] = 0 # 将前景赋对应类别 """ 此处分别使用了anchors_with_max_overlap和 anchor_to_gt_max >= matched_threshold来对该类别的anchor进行赋值 但是我个人觉得anchor_to_gt_max >= matched_threshold已经包含了anchors_with_max_overlap的那些与GT拥有最大iou的 anchor了,所以我对这里的计算方式有一点好奇,为什么要分别计算两次, 
如果知道这里原因的小伙伴希望可以给予解答,谢谢! """ labels[anchors_with_max_overlap] = gt_classes[gt_inds_force] # ------------------4.计算bbox_targets和reg_weights--------------------# # 初始化每个anchor的7个回归参数,并设置为0数值 bbox_targets = anchors.new_zeros((num_anchors, self.box_coder.code_size)) # (107136,7) # 如果该帧中有该类别的GT时候,就需要对这些设置为正样本类别的anchor进行编码操作了 if len(gt_boxes) > 0 and anchors.shape[0] > 0: # 使用anchor_to_gt_argmax[fg_inds]来重复索引每个anchor对应前景的GT_box fg_gt_boxes = gt_boxes[anchor_to_gt_argmax[fg_inds], :] # 提取所有属于前景的anchor fg_anchors = anchors[fg_inds, :] """ PointPillar编码gt和前景anchor,并赋值到bbox_targets的对应位置 7个参数的编码的方式为 ∆x = (x^gt − xa^da)/d^a , ∆y = (y^gt − ya^da)/d^a , ∆z = (z^gt − za^ha)/h^a ∆w = log (w^gt / w^a) ∆l = log (l^gt / l^a) , ∆h = log (h^gt / h^a) ∆θ = sin(θ^gt - θ^a) """ bbox_targets[fg_inds, :] = self.box_coder.encode_torch(fg_gt_boxes, fg_anchors) # 初始化回归权重,并设置值为0 reg_weights = anchors.new_zeros((num_anchors,)) # (107136,) if self.norm_by_num_examples: # PointPillars回归权重中不需要norm_by_num_examples num_examples = (labels >= 0).sum() num_examples = num_examples if num_examples > 1.0 else 1.0 reg_weights[labels > 0] = 1.0 / num_examples else: reg_weights[labels > 0] = 1.0 # 将前景anchor的回归权重设置为1 ret_dict = { 'box_cls_labels': labels, # (107136,) 'box_reg_targets': bbox_targets, # (107136,7)编码后的结果 'reg_weights': reg_weights, # (107136,) } return ret_dict此处根据论文中的公式对匹配被正样本的anchor_box和与之对应的GT-box的7个回归参数进行编码,公式如下:
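The encoding formulas referred to here can be written out as follows. This is a reconstruction from the docstring and from the `encode_torch` code in `pcdet/utils/box_coder_utils.py`, not a copy of the paper's figure. The subscript g marks the ground-truth box, a marks the anchor, and t marks the encoded target. Note that the angle target is the plain difference, as in `rts = [rg - ra]`; the sine is applied later in the loss, not in the coder.

```latex
\begin{aligned}
x_t &= \frac{x_g - x_a}{d_a}, &
y_t &= \frac{y_g - y_a}{d_a}, &
z_t &= \frac{z_g - z_a}{h_a}, \\
l_t &= \log\frac{l_g}{l_a}, &
w_t &= \log\frac{w_g}{w_a}, &
h_t &= \log\frac{h_g}{h_a}, \\
\theta_t &= \theta_g - \theta_a, &
d_a &= \sqrt{l_a^{2} + w_a^{2}}.
\end{aligned}
```

In the code, `dxa`, `dya`, `dza` play the roles of l_a, w_a, h_a, and `diagonal` is d_a.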

x^gt代表了标注框的x长度 ;x^a代表了先验框的长度信息,d^a表示先验框长度和宽度的对角线距离,定义为: 代码在:pcdet/utils/box_coder_utils.py class ResidualCoder(object): def __init__(self, code_size=7, encode_angle_by_sincos=False, **kwargs): """ loss中anchor和gt的编码与解码 7个参数的编码的方式为 ∆x = (x^gt − xa^da)/d^a , ∆y = (y^gt − ya^da)/d^a , ∆z = (z^gt − za^ha)/h^a ∆w = log (w^gt / w^a) ∆l = log (l^gt / l^a) , ∆h = log (h^gt / h^a) ∆θ = sin(θ^gt - θ^a) """ super().__init__() self.code_size = code_size self.encode_angle_by_sincos = encode_angle_by_sincos if self.encode_angle_by_sincos: self.code_size += 1 def encode_torch(self, boxes, anchors): """ Args: boxes: (N, 7 + C) [x, y, z, dx, dy, dz, heading, ...] anchors: (N, 7 + C) [x, y, z, dx, dy, dz, heading or *[cos, sin], ...] Returns: """ # 截断anchors的[dx,dy,dz],每个anchor_box的l, w, h数值如果小于1e-5则为1e-5 anchors[:, 3:6] = torch.clamp_min(anchors[:, 3:6], min=1e-5) # 截断boxes的[dx,dy,dz] 每个GT_box的l, w, h数值如果小于1e-5则为1e-5 boxes[:, 3:6] = torch.clamp_min(boxes[:, 3:6], min=1e-5) # If split_size_or_sections is an integer type, then tensor will be split into equally sized chunks (if possible). # Last chunk will be smaller if the tensor size along the given dimension dim is not divisible by split_size. 
# 这里指torch.split的第二个参数 torch.split(tensor, split_size, dim=) split_size是切分后每块的大小,不是切分为多少块!,多余的参数使用*cags接收 xa, ya, za, dxa, dya, dza, ra, *cas = torch.split(anchors, 1, dim=-1) xg, yg, zg, dxg, dyg, dzg, rg, *cgs = torch.split(boxes, 1, dim=-1) # 计算anchor对角线长度 diagonal = torch.sqrt(dxa ** 2 + dya ** 2) # 计算loss的公式,Δx,Δy,Δz,Δw,Δl,Δh,Δθ # ∆x = (x^gt − xa^da)/diagonal xt = (xg - xa) / diagonal # ∆y = (y^gt − ya^da)/diagonal yt = (yg - ya) / diagonal # ∆z = (z^gt − za^ha)/h^a zt = (zg - za) / dza # ∆l = log(l ^ gt / l ^ a) dxt = torch.log(dxg / dxa) # ∆w = log(w ^ gt / w ^ a) dyt = torch.log(dyg / dya) # ∆h = log(h ^ gt / h ^ a) dzt = torch.log(dzg / dza) # False if self.encode_angle_by_sincos: rt_cos = torch.cos(rg) - torch.cos(ra) rt_sin = torch.sin(rg) - torch.sin(ra) rts = [rt_cos, rt_sin] else: rts = [rg - ra] # Δθ cts = [g - a for g, a in zip(cgs, cas)] return torch.cat([xt, yt, zt, dxt, dyt, dzt, *rts, *cts], dim=-1) 9、loss计算总的loss计算公式为: 其中总beta1,beta2,beta3分别为1.0,2.0,0.2,分别用于控制类别分类损失,anchor的回归损失,方向分类损失。reg-θ为角度回归,reg-other为位置和尺度回归。 注:使用较小的方向回归损失,可以防止网络在物体的方向分类上摇摆不定。 9.1、分类loss计算由于每个类别都会在特征图上面产生H*W*num_rou*num_scale个anchor,然而实际中一个类别可能不会出现在这帧点云中,或者仅有几个anchor配匹配为正样本,这就导致了类别的极度不平衡;因此作者引入了RetinaNet中的focal loss来缓解类别不均衡的情况和正负样本的权重。 其中,aplha参数和gamma参数都和RetinaNet中的设置一样,分别为0.25和2。 代码在:pcdet/models/dense_heads/anchor_head_template.py def get_cls_layer_loss(self): # (batch_size, 248, 216, 18) 网络类别预测 cls_preds = self.forward_ret_dict['cls_preds'] # (batch_size, 321408) 前景anchor类别 box_cls_labels = self.forward_ret_dict['box_cls_labels'] batch_size = int(cls_preds.shape[0]) # [batch_szie, num_anchors]--> (batch_size, 321408) # 关心的anchor 选取出前景背景anchor, 在0.45到0.6之间的设置为仍然是-1,不参与loss计算 cared = box_cls_labels >= 0 # (batch_size, 321408) 前景anchor positives = box_cls_labels > 0 # (batch_size, 321408) 背景anchor negatives = box_cls_labels == 0 # 背景anchor赋予权重 negative_cls_weights = negatives * 1.0 # 将每个anchor分类的损失权重都设置为1 cls_weights = (negative_cls_weights + 1.0 * positives).float() # 
每个正样本anchor的回归损失权重,设置为1 reg_weights = positives.float() # 如果只有一类 if self.num_class == 1: # class agnostic box_cls_labels[positives] = 1 # 正则化并计算权重 求出每个数据中有多少个正例,即shape=(batch, 1) pos_normalizer = positives.sum(1, keepdim=True).float() # (4,1) 所有正例的和 eg:[[162.],[166.],[155.],[108.]] # 正则化回归损失-->(batch_size, 321408),最小值为1,根据论文中所述,用正样本数量来正则化回归损失 reg_weights /= torch.clamp(pos_normalizer, min=1.0) # 正则化分类损失-->(batch_size, 321408),根据论文中所述,用正样本数量来正则化分类损失 cls_weights /= torch.clamp(pos_normalizer, min=1.0) # care包含了背景和前景的anchor,但是这里只需要得到前景部分的类别即可不关注-1和0 # cared.type_as(box_cls_labels) 将cared中为False的那部分不需要计算loss的anchor变成了0 # 对应位置相乘后,所有背景和iou介于match_threshold和unmatch_threshold之间的anchor都设置为0 cls_targets = box_cls_labels * cared.type_as(box_cls_labels) # 在最后一个维度扩展一次 cls_targets = cls_targets.unsqueeze(dim=-1) cls_targets = cls_targets.squeeze(dim=-1) one_hot_targets = torch.zeros( *list(cls_targets.shape), self.num_class + 1, dtype=cls_preds.dtype, device=cls_targets.device ) # (batch_size, 321408, 4),这里的类别数+1是考虑背景 # target.scatter(dim, index, src) # scatter_函数的一个典型应用就是在分类问题中, # 将目标标签转换为one-hot编码形式 https://blog.csdn.net/guofei_fly/article/details/104308528 # 这里表示在最后一个维度,将cls_targets.unsqueeze(dim=-1)所索引的位置设置为1 """ dim=1: 表示按照列进行填充 index=batch_data.label:表示把batch_data.label里面的元素值作为下标, 去下标对应位置(这里的"对应位置"解释为列,如果dim=0,那就解释为行)进行填充 src=1:表示填充的元素值为1 """ # (batch_size, 321408, 4) one_hot_targets.scatter_(-1, cls_targets.unsqueeze(dim=-1).long(), 1.0) # (batch_size, 248, 216, 18) --> (batch_size, 321408, 3) cls_preds = cls_preds.view(batch_size, -1, self.num_class) # (batch_size, 321408, 3) 不计算背景分类损失 one_hot_targets = one_hot_targets[..., 1:] # 计算分类损失 # [N, M] # (batch_size, 321408, 3) cls_loss_src = self.cls_loss_func(cls_preds, one_hot_targets, weights=cls_weights) # 求和并除以batch数目 cls_loss = cls_loss_src.sum() / batch_size # loss乘以分类权重 --> cls_weight=1.0 cls_loss = cls_loss * self.model_cfg.LOSS_CONFIG.LOSS_WEIGHTS['cls_weight'] tb_dict = { 'rpn_loss_cls': cls_loss.item() } return 
cls_loss, tb_dictfocal loss代码在:pcdet/utils/loss_utils.py class SigmoidFocalClassificationLoss(nn.Module): """ 多分类 Sigmoid focal cross entropy loss. """ def __init__(self, gamma: float = 2.0, alpha: float = 0.25): """ Args: gamma: Weighting parameter to balance loss for hard and easy examples. alpha: Weighting parameter to balance loss for positive and negative examples. """ super(SigmoidFocalClassificationLoss, self).__init__() self.alpha = alpha # 0.25 self.gamma = gamma # 2.0 @staticmethod def sigmoid_cross_entropy_with_logits(input: torch.Tensor, target: torch.Tensor): """ PyTorch Implementation for tf.nn.sigmoid_cross_entropy_with_logits: max(x, 0) - x * z + log(1 + exp(-abs(x))) in https://www.tensorflow.org/api_docs/python/tf/nn/sigmoid_cross_entropy_with_logits Args: input: (B, #anchors, #classes) float tensor. Predicted logits for each class target: (B, #anchors, #classes) float tensor. One-hot encoded classification targets Returns: loss: (B, #anchors, #classes) float tensor. Sigmoid cross entropy loss without reduction """ loss = torch.clamp(input, min=0) - input * target + \ torch.log1p(torch.exp(-torch.abs(input))) return loss def forward(self, input: torch.Tensor, target: torch.Tensor, weights: torch.Tensor): """ Args: input: (B, #anchors, #classes) float tensor. eg:(4, 321408, 3) Predicted logits for each class :一个anchor会预测三种类别 target: (B, #anchors, #classes) float tensor. eg:(4, 321408, 3) One-hot encoded classification targets,:真值 weights: (B, #anchors) float tensor. eg:(4, 321408) Anchor-wise weights. Returns: weighted_loss: (B, #anchors, #classes) float tensor after weighting. 
""" pred_sigmoid = torch.sigmoid(input) # (batch_size, 321408, 3) f(x) = 1 / (1 + e^(-x)) # 这里的加权主要是解决正负样本不均衡的问题:正样本的权重为0.25,负样本的权重为0.75 # 交叉熵来自KL散度,衡量两个分布之间的相似性,针对二分类问题: # 合并形式: L = -(y * log(y^) + (1 - y) * log(1 - y^)) # 分段形式:y = 1, L = -y * log(y^); y = 0, L = -(1 - y) * log(1 - y^) # 这两种形式等价,只要是0和1的分类问题均可以写成两种等价形式,针对focal loss做类似处理 # 相对熵 = 信息熵 + 交叉熵, 且交叉熵是凸函数,求导时能够得到全局最优值-->(sigma(s)- y)x # https://zhuanlan.zhihu.com/p/35709485 alpha_weight = target * self.alpha + (1 - target) * (1 - self.alpha) # (4, 321408, 3) pt = target * (1.0 - pred_sigmoid) + (1.0 - target) * pred_sigmoid focal_weight = alpha_weight * torch.pow(pt, self.gamma) # (batch_size, 321408, 3) 交叉熵损失的一种变形,具体推到参考上面的链接 bce_loss = self.sigmoid_cross_entropy_with_logits(input, target) loss = focal_weight * bce_loss # (batch_size, 321408, 3) if weights.shape.__len__() == 2 or \ (weights.shape.__len__() == 1 and target.shape.__len__() == 2): weights = weights.unsqueeze(-1) assert weights.shape.__len__() == loss.shape.__len__() # weights参数使用正anchor数目进行平均,使得每个样本的损失与样本中目标的数量无关 return loss * weights 9.2、回归loss计算SECOND的回归loss计算与VoxelNet相近,其中作者改进了VoxelNet会因为方向角预测相反而产生大loss的情况。

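The angle loss from the SECOND paper is L_θ = SmoothL1(sin(θp − θt)). The sketch below is a scalar, pure-Python illustration of why this helps. It is not the OpenPCDet implementation (which works on batched tensors of residuals relative to the anchor); the function names `sin_difference_loss` and `plain_difference_loss` are made up for this example. It uses the same identity as `add_sin_difference`, sin(a − b) = sin a · cos b − cos a · sin b, with angles in radians.

```python
import math


def smooth_l1(diff: float, beta: float = 1.0 / 9.0) -> float:
    """Smooth L1 on a scalar residual (same piecewise form as WeightedSmoothL1Loss)."""
    n = abs(diff)
    return 0.5 * n * n / beta if n < beta else n - 0.5 * beta


def sin_difference_loss(theta_pred: float, theta_gt: float) -> float:
    """Smooth L1 of sin(theta_pred - theta_gt), split into two encodings."""
    pred_enc = math.sin(theta_pred) * math.cos(theta_gt)  # sin(a) * cos(b)
    gt_enc = math.cos(theta_pred) * math.sin(theta_gt)    # cos(a) * sin(b)
    return smooth_l1(pred_enc - gt_enc)                   # residual is sin(a - b)


def plain_difference_loss(theta_pred: float, theta_gt: float) -> float:
    """Smooth L1 directly on the angle difference, for comparison."""
    return smooth_l1(theta_pred - theta_gt)


if __name__ == "__main__":
    gt = 0.3
    flipped = gt + math.pi  # same box, heading reversed
    print("sin loss, flipped heading:  ", sin_difference_loss(flipped, gt))
    print("plain loss, flipped heading:", plain_difference_loss(flipped, gt))
```

A prediction that is off by exactly 180 degrees gets almost no regression penalty under the sine form, which is why a separate direction classifier is needed to tell the two headings apart.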
θp为预测的方向角, θt为真实的方向角,当θp和θt预测相差180度时,损失照样趋近于0,同时加入了对方向的分类来解决出现预测和真实反向的情况;在direction classifer分支对每个anchor都预测了one-hot 向量用来判定角度。其中该分类问题定义为,theta>0则为正,theta 0 # 设置回归参数为1. [True, False] * 1. = [1., 0.] reg_weights = positives.float() # (4, 211200) 只保留标签>0的值 # 同cls处理 pos_normalizer = positives.sum(1, keepdim=True).float() # (batch_size, 1) 所有正例的和 eg:[[162.],[166.],[155.],[108.]] reg_weights /= torch.clamp(pos_normalizer, min=1.0) # (batch_size, 321408) if isinstance(self.anchors, list): if self.use_multihead: anchors = torch.cat( [anchor.permute(3, 4, 0, 1, 2, 5).contiguous().view(-1, anchor.shape[-1]) for anchor in self.anchors], dim=0) else: anchors = torch.cat(self.anchors, dim=-3) # (1, 248, 216, 3, 2, 7) else: anchors = self.anchors # (1, 248*216, 7) --> (batch_size, 248*216, 7) anchors = anchors.view(1, -1, anchors.shape[-1]).repeat(batch_size, 1, 1) # (batch_size, 248*216, 7) box_preds = box_preds.view(batch_size, -1, box_preds.shape[-1] // self.num_anchors_per_location if not self.use_multihead else box_preds.shape[-1]) # sin(a - b) = sinacosb-cosasinb # (batch_size, 321408, 7) 分别得到sinacosb和cosasinb box_preds_sin, reg_targets_sin = self.add_sin_difference(box_preds, box_reg_targets) loc_loss_src = self.reg_loss_func(box_preds_sin, reg_targets_sin, weights=reg_weights) loc_loss = loc_loss_src.sum() / batch_size loc_loss = loc_loss * self.model_cfg.LOSS_CONFIG.LOSS_WEIGHTS['loc_weight'] # loc_weight = 2.0 损失乘以回归权重 box_loss = loc_loss tb_dict = { # pytorch中的item()方法,返回张量中的元素值,与python中针对dict的item方法不同 'rpn_loss_loc': loc_loss.item() } # 如果存在方向预测,则添加方向损失 if box_dir_cls_preds is not None: # (batch_size, 321408, 2) 此处生成每个anchor的方向分类 dir_targets = self.get_direction_target( anchors, box_reg_targets, dir_offset=self.model_cfg.DIR_OFFSET, # 方向偏移量 0.78539 = π/4 num_bins=self.model_cfg.NUM_DIR_BINS # BINS的方向数 = 2 ) # 方向预测值 (batch_size, 321408, 2) dir_logits = box_dir_cls_preds.view(batch_size, -1, self.model_cfg.NUM_DIR_BINS) # 只要正样本的方向预测值 (batch_size, 321408) weights = 
positives.type_as(dir_logits) # (4, 211200) 除正例数量,使得每个样本的损失与样本中目标的数量无关 weights /= torch.clamp(weights.sum(-1, keepdim=True), min=1.0) # 方向损失计算 dir_loss = self.dir_loss_func(dir_logits, dir_targets, weights=weights) dir_loss = dir_loss.sum() / batch_size # 损失权重,dir_weight: 0.2 dir_loss = dir_loss * self.model_cfg.LOSS_CONFIG.LOSS_WEIGHTS['dir_weight'] # 将方向损失加入box损失 box_loss += dir_loss tb_dict['rpn_loss_dir'] = dir_loss.item() return box_loss, tb_dict add_sin_difference函数 def add_sin_difference(boxes1, boxes2, dim=6): # 针对角度添加sin损失,有效防止-pi和pi方向相反时损失过大 assert dim != -1 # 角度=180°×弧度÷π 弧度=角度×π÷180° # (batch_size, 321408, 1) torch.sin() - torch.cos() 的 input (Tensor) 都是弧度制数据,不是角度制数据。 rad_pred_encoding = torch.sin(boxes1[..., dim:dim + 1]) * torch.cos(boxes2[..., dim:dim + 1]) # (batch_size, 321408, 1) rad_tg_encoding = torch.cos(boxes1[..., dim:dim + 1]) * torch.sin(boxes2[..., dim:dim + 1]) # (batch_size, 321408, 7) 将编码后的结果放回 boxes1 = torch.cat([boxes1[..., :dim], rad_pred_encoding, boxes1[..., dim + 1:]], dim=-1) # (batch_size, 321408, 7) 将编码后的结果放回 boxes2 = torch.cat([boxes2[..., :dim], rad_tg_encoding, boxes2[..., dim + 1:]], dim=-1) return boxes1, boxes2 WeightsSmoothL1损失函数 代码在:pcdet/utils/loss_utils.py class WeightedSmoothL1Loss(nn.Module): """ Code-wise Weighted Smooth L1 Loss modified based on fvcore.nn.smooth_l1_loss https://github.com/facebookresearch/fvcore/blob/master/fvcore/nn/smooth_l1_loss.py | 0.5 * x ** 2 / beta if abs(x) < beta smoothl1(x) = | | abs(x) - 0.5 * beta otherwise, where x = input - target. """ def __init__(self, beta: float = 1.0 / 9.0, code_weights: list = None): """ Args: beta: Scalar float. L1 to L2 change point. For beta values < 1e-5, L1 loss is computed. code_weights: (#codes) float list if not None. Code-wise weights. 
""" super(WeightedSmoothL1Loss, self).__init__() self.beta = beta # 默认值1/9=0.111 if code_weights is not None: self.code_weights = np.array(code_weights, dtype=np.float32) # [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0] self.code_weights = torch.from_numpy(self.code_weights).cuda() # 将权重放到GPU上 @staticmethod def smooth_l1_loss(diff, beta): # 如果beta非常小,则直接用abs计算,否则按照正常的Smooth L1 Loss计算 if beta < 1e-5: loss = torch.abs(diff) else: n = torch.abs(diff) # (batch_size, 321408, 7) # smoothL1公式,如上面所示 --> (batch_size, 321408, 7) loss = torch.where(n < beta, 0.5 * n ** 2 / beta, n - 0.5 * beta) return loss def forward(self, input: torch.Tensor, target: torch.Tensor, weights: torch.Tensor = None): """ Args: input: (B, #anchors, #codes) float tensor. Ecoded predicted locations of objects. target: (B, #anchors, #codes) float tensor. Regression targets. weights: (B, #anchors) float tensor if not None. Returns: loss: (B, #anchors) float tensor. Weighted smooth l1 loss without reduction. """ # 如果target为nan,则等于input,否则等于target target = torch.where(torch.isnan(target), input, target) # ignore nan targets# (batch_size, 321408, 7) diff = input - target # (batch_size, 321408, 7) # code-wise weighting if self.code_weights is not None: diff = diff * self.code_weights.view(1, 1, -1) #(batch_size, 321408, 7) 乘以box每一项的权重 loss = self.smooth_l1_loss(diff, self.beta) # anchor-wise weighting if weights is not None: assert weights.shape[0] == loss.shape[0] and weights.shape[1] == loss.shape[1] # weights参数使用正anchor数目进行平均,使得每个样本的损失与样本中目标的数量无关 loss = loss * weights.unsqueeze(-1) return loss方向分类的target获取 减去dir_offset(45度)的原因可以参考这个issue:get_direction_target@dir_offset · Issue #80 · open-mmlab/OpenPCDet · GitHub 说的呢就是因为大部分目标都集中在0度和180度,270度和90度, 这样就会导致网络在这些角度的物体的预测上面不停的摇摆。所以为了解决这个问题, 将方向分类的角度判断减去45度再进行判断,如下图所示。 这里减掉45度之后,在预测推理的时候,同样预测的角度解码之后 也要减去45度再进行之后测nms等操作。 下图来自FCOS3D论文 FCOS3D:https://arxiv.org/pdf/2104.10956.pdf

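The direction target and its use at decode time can be sketched in scalar form. This is a simplified illustration under assumptions: `direction_target` and `decode_heading` are hypothetical names, `limit_period` mirrors the helper of the same name in `common_utils`, and everything works on single floats instead of tensors. It assumes `DIR_OFFSET = π/4`, `DIR_LIMIT_OFFSET = 0` and `NUM_DIR_BINS = 2`, as in the config values quoted above.

```python
import math


def limit_period(val: float, offset: float, period: float) -> float:
    """Wrap val into [-offset * period, (1 - offset) * period)."""
    return val - math.floor(val / period + offset) * period


def direction_target(theta_gt: float, dir_offset: float = math.pi / 4,
                     num_bins: int = 2) -> int:
    """Direction bin of a ground-truth heading after shifting it by dir_offset."""
    shifted = limit_period(theta_gt - dir_offset, 0.0, 2 * math.pi)
    bin_idx = int(math.floor(shifted / (2 * math.pi / num_bins)))
    return min(max(bin_idx, 0), num_bins - 1)


def decode_heading(theta_pred: float, dir_label: int,
                   dir_offset: float = math.pi / 4, num_bins: int = 2) -> float:
    """Combine a regressed heading (ambiguous by 180 degrees) with the direction bin."""
    period = 2 * math.pi / num_bins
    dir_rot = limit_period(theta_pred - dir_offset, 0.0, period)
    return dir_rot + dir_offset + period * dir_label


if __name__ == "__main__":
    theta_gt = 0.2
    label = direction_target(theta_gt)
    # The regression branch may land on the flipped heading; the label fixes it.
    print(decode_heading(theta_gt + math.pi, label) % (2 * math.pi))
```

With the 45 degree offset, headings slightly on either side of 0 fall into the same bin, so the classifier does not have to flip its label for the most common object orientations.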
至此SECOND的网络构建和LOSS计算就完成了,下面看一下SECOND的推理过程。 10、推理实现代码回到:pcdet/models/dense_heads/anchor_head_single.py # 如果不是训练模式,则直接生成进行box的预测 if not self.training or self.predict_boxes_when_training: # 根据预测结果解码生成最终结果 batch_cls_preds, batch_box_preds = self.generate_predicted_boxes( batch_size=data_dict['batch_size'], cls_preds=cls_preds, box_preds=box_preds, dir_cls_preds=dir_cls_preds ) data_dict['batch_cls_preds'] = batch_cls_preds # (1, 211200, 3) 70400*3=211200 data_dict['batch_box_preds'] = batch_box_preds # (1, 211200, 7) data_dict['cls_preds_normalized'] = False return data_dict推理模式下,在预测出每个anchor的分类和回归后,需要根据anchor和预测的结果进行解码操作,再进行NMS去除冗余测检测框。 10.1 预测结果解码: def generate_predicted_boxes(self, batch_size, cls_preds, box_preds, dir_cls_preds=None): """ Args: batch_size: cls_preds: (N, H, W, C1) box_preds: (N, H, W, C2) dir_cls_preds: (N, H, W, C3) Returns: batch_cls_preds: (B, num_boxes, num_classes) batch_box_preds: (B, num_boxes, 7+C) """ if isinstance(self.anchors, list): # 是否使用多头预测,默认否 if self.use_multihead: anchors = torch.cat([anchor.permute(3, 4, 0, 1, 2, 5).contiguous().view(-1, anchor.shape[-1]) for anchor in self.anchors], dim=0) else: """ 每个类别anchor的生成情况: [(Z, Y, X, anchor尺度, 该尺度anchor方向, 7个回归参数) (Z, Y, X, anchor尺度, 该尺度anchor方向, 7个回归参数) (Z, Y, X, anchor尺度, 该尺度anchor方向, 7个回归参数)] 在倒数第三个维度拼接 anchors 维度 (Z, Y, X, 3个anchor尺度, 每个尺度两个方向, 7) (1, 248, 216, 3, 2, 7) """ anchors = torch.cat(self.anchors, dim=-3) else: anchors = self.anchors # 计算一共有多少个anchor Z*Y*X*num_of_anchor_scale*anchor_rot num_anchors = anchors.view(-1, anchors.shape[-1]).shape[0] # (batch_size, Z*Y*X*num_of_anchor_scale*anchor_rot, 7) batch_anchors = anchors.view(1, -1, anchors.shape[-1]).repeat(batch_size, 1, 1) # 将预测结果都flatten为一维的 # (batch_size, Z*Y*X*num_of_anchor_scale*anchor_rot, 3) batch_cls_preds = cls_preds.view(batch_size, num_anchors, -1).float() \ if not isinstance(cls_preds, list) else cls_preds # (batch_size, Z*Y*X*num_of_anchor_scale*anchor_rot, 7) batch_box_preds = box_preds.view(batch_size, 
num_anchors, -1) if not isinstance(box_preds, list) \ else torch.cat(box_preds, dim=1).view(batch_size, num_anchors, -1) # 对7个预测的box参数进行解码操作 batch_box_preds = self.box_coder.decode_torch(batch_box_preds, batch_anchors) # 每个anchor的方向预测 if dir_cls_preds is not None: # 0.78539 方向偏移 dir_offset = self.model_cfg.DIR_OFFSET # 0 dir_limit_offset = self.model_cfg.DIR_LIMIT_OFFSET # 0 # 将方向预测结果flatten为一维的 (batch_size, Z*Y*X*num_of_anchor_scale*anchor_rot, 2) dir_cls_preds = dir_cls_preds.view(batch_size, num_anchors, -1) if not isinstance(dir_cls_preds, list) \ else torch.cat(dir_cls_preds, dim=1).view(batch_size, num_anchors, -1) # (1, 321408, 2) # (batch_size, Z*Y*X*num_of_anchor_scale*anchor_rot) # 取出所有anchor的方向分类 : 正向和反向 dir_labels = torch.max(dir_cls_preds, dim=-1)[1] # pi period = (2 * np.pi / self.model_cfg.NUM_DIR_BINS) # 将角度在0到pi之间 在OpenPCDet中,坐标使用的是统一规范坐标,x向前,y向左,z向上 # 这里参考训练时候的原因,现将角度角度沿着x轴的逆时针旋转了45度得到dir_rot dir_rot = common_utils.limit_period( batch_box_preds[..., 6] - dir_offset, dir_limit_offset, period ) """ 从新将角度旋转回到激光雷达坐标系中,所以需要加回来之前减去的45度, 如果dir_labels是1的话,说明方向在是180度的,因此需要将预测的角度信息加上180度, 否则预测角度即是所得角度 """ batch_box_preds[..., 6] = dir_rot + dir_offset + period * dir_labels.to(batch_box_preds.dtype) # PointPillars、SECOND中无此项 if isinstance(self.box_coder, box_coder_utils.PreviousResidualDecoder): batch_box_preds[..., 6] = common_utils.limit_period( -(batch_box_preds[..., 6] + np.pi / 2), offset=0.5, period=np.pi * 2 ) return batch_cls_preds, batch_box_preds decode_torch BOX解码操作为编码的逆运算代码在:pcdet/utils/box_coder_utils.py class ResidualCoder(object): def __init__(self, code_size=7, encode_angle_by_sincos=False, **kwargs): """ loss中anchor和gt的编码与解码 7个参数的编码的方式为 ∆x = (x^gt − xa^da)/d^a , ∆y = (y^gt − ya^da)/d^a , ∆z = (z^gt − za^ha)/h^a ∆w = log (w^gt / w^a) ∆l = log (l^gt / l^a) , ∆h = log (h^gt / h^a) ∆θ = sin(θ^gt - θ^a) """ super().__init__() self.code_size = code_size self.encode_angle_by_sincos = encode_angle_by_sincos if self.encode_angle_by_sincos: 
self.code_size += 1 def decode_torch(self, box_encodings, anchors): """ Args: box_encodings: (B, N, 7 + C) or (N, 7 + C) [x, y, z, dx, dy, dz, heading or *[cos, sin], ...] anchors: (B, N, 7 + C) or (N, 7 + C) [x, y, z, dx, dy, dz, heading, ...] Returns: """ # 这里指torch.split的第二个参数 torch.split(tensor, split_size, dim=) # split_size是切分后每块的大小,不是切分为多少块!,多余的参数使用*cags接收 xa, ya, za, dxa, dya, dza, ra, *cas = torch.split(anchors, 1, dim=-1) # 分割编码后的box PointPillar为False if not self.encode_angle_by_sincos: xt, yt, zt, dxt, dyt, dzt, rt, *cts = torch.split(box_encodings, 1, dim=-1) else: xt, yt, zt, dxt, dyt, dzt, cost, sint, *cts = torch.split(box_encodings, 1, dim=-1) # 计算anchor对角线长度 diagonal = torch.sqrt(dxa ** 2 + dya ** 2) # (B, N, 1)-->(1, 321408, 1) # loss计算中anchor与GT编码的运算:g表示gt,a表示anchor # ∆x = (x^gt − xa^da)/diagonal --> x^gt = ∆x * diagonal + x^da # 下同 xg = xt * diagonal + xa yg = yt * diagonal + ya zg = zt * dza + za # ∆l = log(l^gt / l^a)的逆运算 --> l^gt = exp(∆l) * l^a # 下同 dxg = torch.exp(dxt) * dxa dyg = torch.exp(dyt) * dya dzg = torch.exp(dzt) * dza # 如果角度是cos和sin编码,采用新的解码方式 PointPillar为False if self.encode_angle_by_sincos: rg_cos = cost + torch.cos(ra) rg_sin = sint + torch.sin(ra) rg = torch.atan2(rg_sin, rg_cos) else: # rts = [rg - ra] 角度的逆运算 rg = rt + ra # PointPillar无此项 cgs = [t + a for t, a in zip(cts, cas)] return torch.cat([xg, yg, zg, dxg, dyg, dzg, rg, *cgs], dim=-1) 10.2 无类别NMS在3D的环境中,不考虑不同类别的物体会出现在同一处3D的空间中。 代码在:pcdet/models/detectors/detector3d_template.py def post_processing(self, batch_dict): """ Args: batch_dict: batch_size: batch_cls_preds: (B, num_boxes, num_classes | 1) or (N1+N2+..., num_classes | 1) or [(B, num_boxes, num_class1), (B, num_boxes, num_class2) ...] multihead_label_mapping: [(num_class1), (num_class2), ...] batch_box_preds: (B, num_boxes, 7+C) or (N1+N2+..., 7+C) cls_preds_normalized: indicate whether batch_cls_preds is normalized batch_index: optional (N1+N2+...) has_class_labels: True/False roi_labels: (B, num_rois) 1 .. 
num_classes batch_pred_labels: (B, num_boxes, 1) Returns: """ # post_process_cfg后处理参数,包含了nms类型、阈值、使用的设备、nms后最多保留的结果和输出的置信度等设置 post_process_cfg = self.model_cfg.POST_PROCESSING # 推理默认为1 batch_size = batch_dict['batch_size'] # 保留计算recall的字典 recall_dict = {} # 预测结果存放在此 pred_dicts = [] # 逐帧进行处理 for index in range(batch_size): if batch_dict.get('batch_index', None) is not None: assert batch_dict['batch_box_preds'].shape.__len__() == 2 batch_mask = (batch_dict['batch_index'] == index) else: assert batch_dict['batch_box_preds'].shape.__len__() == 3 # 得到当前处理的是第几帧 batch_mask = index # box_preds shape (所有anchor的数量, 7) box_preds = batch_dict['batch_box_preds'][batch_mask] # 复制后,用于recall计算 src_box_preds = box_preds if not isinstance(batch_dict['batch_cls_preds'], list): # (所有anchor的数量, 3) cls_preds = batch_dict['batch_cls_preds'][batch_mask] # 同上 src_cls_preds = cls_preds assert cls_preds.shape[1] in [1, self.num_class] if not batch_dict['cls_preds_normalized']: # 损失函数计算使用的BCE,所以这里使用sigmoid激活函数得到类别概率 cls_preds = torch.sigmoid(cls_preds) else: cls_preds = [x[batch_mask] for x in batch_dict['batch_cls_preds']] src_cls_preds = cls_preds if not batch_dict['cls_preds_normalized']: cls_preds = [torch.sigmoid(x) for x in cls_preds] # 是否使用多类别的NMS计算,否,不考虑不同类别的物体会在3D空间中重叠 if post_process_cfg.NMS_CONFIG.MULTI_CLASSES_NMS: if not isinstance(cls_preds, list): cls_preds = [cls_preds] multihead_label_mapping = [torch.arange(1, self.num_class, device=cls_preds[0].device)] else: multihead_label_mapping = batch_dict['multihead_label_mapping'] cur_start_idx = 0 pred_scores, pred_labels, pred_boxes = [], [], [] for cur_cls_preds, cur_label_mapping in zip(cls_preds, multihead_label_mapping): assert cur_cls_preds.shape[1] == len(cur_label_mapping) cur_box_preds = box_preds[cur_start_idx: cur_start_idx + cur_cls_preds.shape[0]] cur_pred_scores, cur_pred_labels, cur_pred_boxes = model_nms_utils.multi_classes_nms( cls_scores=cur_cls_preds, box_preds=cur_box_preds, 
nms_config=post_process_cfg.NMS_CONFIG, score_thresh=post_process_cfg.SCORE_THRESH ) cur_pred_labels = cur_label_mapping[cur_pred_labels] pred_scores.append(cur_pred_scores) pred_labels.append(cur_pred_labels) pred_boxes.append(cur_pred_boxes) cur_start_idx += cur_cls_preds.shape[0] final_scores = torch.cat(pred_scores, dim=0) final_labels = torch.cat(pred_labels, dim=0) final_boxes = torch.cat(pred_boxes, dim=0) else: # 得到类别预测的最大概率,和对应的索引值 cls_preds, label_preds = torch.max(cls_preds, dim=-1) if batch_dict.get('has_class_labels', False): # 如果有roi_labels在里面字典里面, # 使用第一阶段预测的label为改预测结果的分类类别 label_key = 'roi_labels' if 'roi_labels' in batch_dict else 'batch_pred_labels' label_preds = batch_dict[label_key][index] else: # 类别预测值加1 label_preds = label_preds + 1 # 无类别NMS操作 # selected : 返回了被留下来的anchor索引 # selected_scores : 返回了被留下来的anchor的置信度分数 selected, selected_scores = model_nms_utils.class_agnostic_nms( # 每个anchor的类别预测概率和anchor回归参数 box_scores=cls_preds, box_preds=box_preds, nms_config=post_process_cfg.NMS_CONFIG, score_thresh=post_process_cfg.SCORE_THRESH ) # 无此项 if post_process_cfg.OUTPUT_RAW_SCORE: max_cls_preds, _ = torch.max(src_cls_preds, dim=-1) selected_scores = max_cls_preds[selected] # 得到最终类别预测的分数 final_scores = selected_scores # 根据selected得到最终类别预测的结果 final_labels = label_preds[selected] # 根据selected得到最终box回归的结果 final_boxes = box_preds[selected] # 如果没有GT的标签在batch_dict中,就不会计算recall值 recall_dict = self.generate_recall_record( box_preds=final_boxes if 'rois' not in batch_dict else src_box_preds, recall_dict=recall_dict, batch_index=index, data_dict=batch_dict, thresh_list=post_process_cfg.RECALL_THRESH_LIST ) # 生成最终预测的结果字典 record_dict = { 'pred_boxes': final_boxes, 'pred_scores': final_scores, 'pred_labels': final_labels } pred_dicts.append(record_dict) return pred_dicts, recall_dict 11、结果和数据增强 11.1 模型结果和可视化由于SECOND的最新实现中,改动较多,这里直接展示它在PCDET的KITTI数据集上结果。 SECOND在KITTI数据集测试结果(结果仅显示在kitti验证集moderate精度)

Image from color camera 2

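Point cloud detection result

11.2 Data augmentation

1、SECOND proposes cropping ground-truth (GT) objects out of the dataset to build a GT database. Many later networks adopted this method, which became known as GT_AUG.
First, every labeled box in the dataset is cropped together with the points inside it to build the database.
Next, some GT objects are randomly sampled from the database and placed into the current training frame.
Finally, a collision test is run over all GT boxes, and any sampled GT that collides with another box is deleted, so the frame never contains overlaps that could not occur in the physical world.
This method greatly speeds up convergence and improves the final accuracy of the network. The figure below compares training with and without it.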

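Note: this method works well, but it can only be used in LiDAR-only detection models. How to apply it to multi-sensor fusion models is still an open question worth thinking about.
2、Global rotation and scaling of the whole point cloud: the rotation angle is drawn from (-45°, 45°) and the scale factor from (0.95, 1.05).
3、Per-object GT translation and rotation. This method comes from VoxelNet: each GT box is randomly rotated and shifted independently.
As for the ablation study in the paper, I do not think it is presented very well. One part covers GT_AUG, and its conclusion is the figure above. The other part covers the improved angle encoding, which gives a small accuracy gain. I will not go through it here; the results figure is included below for reference.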
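The augmentation steps in section 11.2 can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the OpenPCDet code: the helper names are hypothetical, boxes are `(cx, cy, dx, dy)` tuples in bird's-eye view, and the collision test is axis-aligned, whereas a real pipeline would test rotated boxes.

```python
import math
import random


def boxes_overlap(a, b) -> bool:
    """Axis-aligned BEV overlap test for boxes given as (cx, cy, dx, dy)."""
    return (abs(a[0] - b[0]) < (a[2] + b[2]) / 2
            and abs(a[1] - b[1]) < (a[3] + b[3]) / 2)


def filter_colliding_samples(existing, sampled):
    """Keep sampled boxes that hit neither an existing box nor an already kept sample."""
    kept = []
    for box in sampled:
        if not any(boxes_overlap(box, other) for other in list(existing) + kept):
            kept.append(box)
    return kept


def global_rotate_scale(points, angle: float, scale: float):
    """Rotate (x, y, z) points about the z axis by angle (radians), then scale them."""
    c, s = math.cos(angle), math.sin(angle)
    return [((c * x - s * y) * scale, (s * x + c * y) * scale, z * scale)
            for x, y, z in points]


def random_global_params(rng: random.Random):
    """Draw a rotation in (-45, 45) degrees and a scale in (0.95, 1.05)."""
    return rng.uniform(-math.pi / 4, math.pi / 4), rng.uniform(0.95, 1.05)


if __name__ == "__main__":
    existing = [(0.0, 0.0, 4.0, 2.0)]
    sampled = [(1.0, 0.0, 4.0, 2.0), (10.0, 10.0, 4.0, 2.0), (11.0, 10.0, 4.0, 2.0)]
    print(filter_colliding_samples(existing, sampled))
    angle, scale = random_global_params(random.Random(0))
    print(global_rotate_scale([(1.0, 0.0, 0.5)], angle, scale))
```

In a real pipeline the same rotation and scale must also be applied to the GT boxes (centers, sizes and headings), otherwise labels and points drift apart.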

In the figure, "vector" refers to the angle encoding used in AVOD.

References:
1、【3D目标检测】SECOND算法解析 - 知乎
2、Sensors | Free Full-Text | SECOND: Sparsely Embedded Convolutional Detection
3、GitHub - open-mmlab/OpenPCDet: OpenPCDet Toolbox for LiDAR-based 3D Object Detection.
4、GitHub - jjw-DL/OpenPCDet-Noted: OpenPCDet代码分析与注释
5、VoxelNet点云检测详解_NNNNNathan的博客-CSDN博客_voxelnet 点云 目标检测
